AiNexaVerse News

🌟 AiNexaVerse Weekly: Open Source Disruption & Enterprise Scale

Welcome back to AiNexaVerse – where cutting-edge AI meets practical implementation. This week's intelligence focuses on the democratization wave: open-source models reaching commercial quality, enterprise platforms removing scaling barriers, and specialized AI applications securing serious funding. The builders are building, and the ecosystem is responding.

📋 This Week's Intelligence Brief

| 🎯 Topic | 🏢 Company | 💥 Impact Level |
|---|---|---|
| HiDream-I1 Open Source | HiDream AI | 🔥🔥🔥 Game-Changer |
| Latest AI Research Wave | arXiv Community | 🔥🔥 Significant |
| Spear AI Defense Funding | Spear AI | 🔥🔥 Notable |
| Claude Rate Limit Boost | Anthropic | 🔥 Important |
| Gemini Flash Lite Launch | Google | 🔥 Efficiency Focus |

🎨 HIDREAM-I1: OPEN SOURCE MEETS COMMERCIAL GRADE

Something rare just happened: a 17B-parameter open-source image generation model that genuinely competes with commercial offerings. HiDream-I1 achieved state-of-the-art HPS v2.1 scores and ships under the MIT license, making high-quality AI image generation freely available to every developer and startup.

🔍 Why this matters: This removes the licensing-cost barrier to high-quality AI image generation. Startups can now integrate commercial-grade visual AI without licensing fees or API limits, paying only for the compute needed to run the model themselves. With 235K+ downloads already, HiDream-I1 is putting capabilities that were previously exclusive to well-funded companies within everyone's reach.

Technical Edge: Sparse Diffusion Transformer architecture and industry-leading GenEval benchmark scores

Business Impact: The MIT License permits commercial use, modification, and redistribution; the main condition is keeping the copyright and license notice

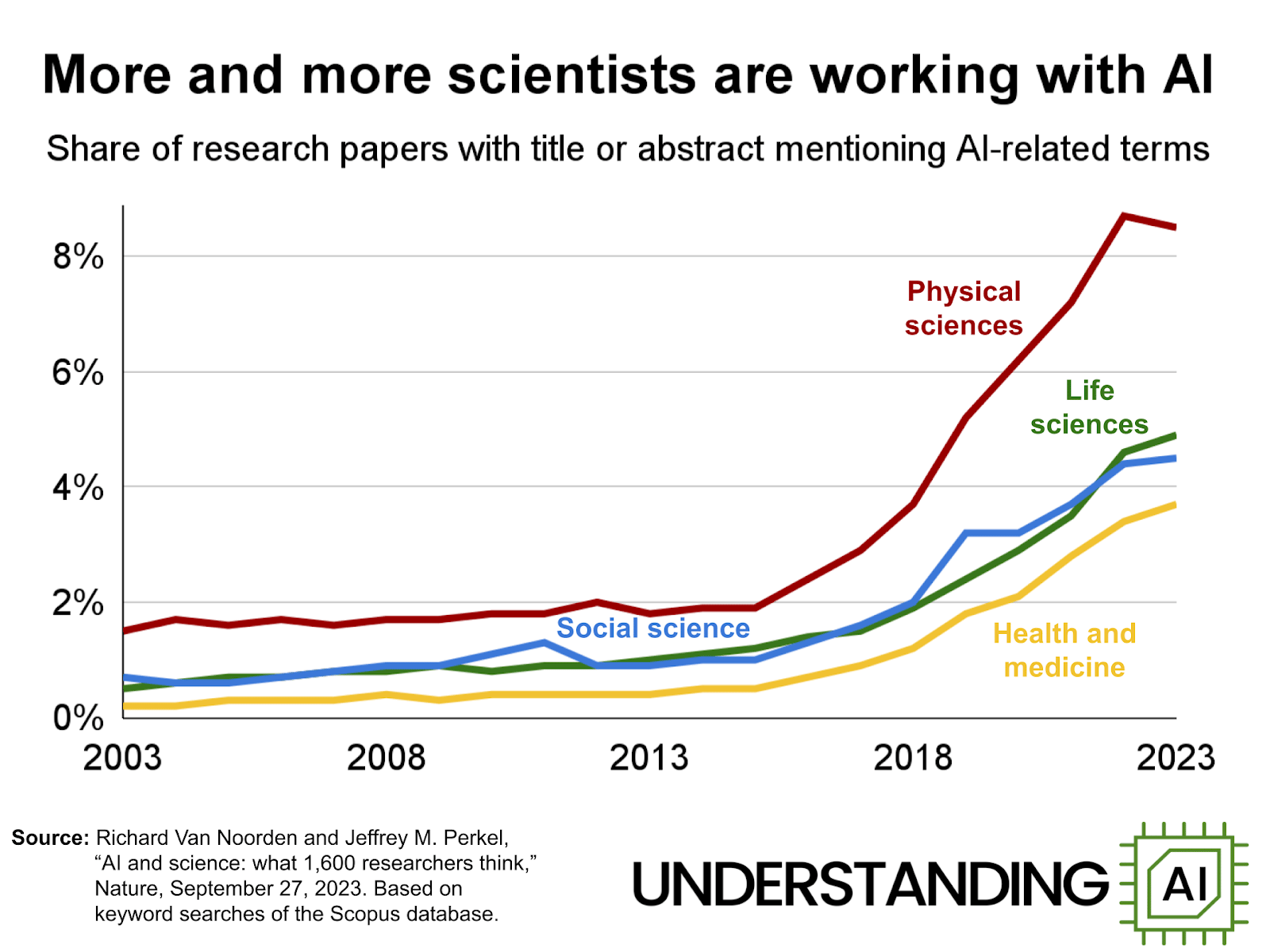

📚 RESEARCH FRONTIER: AI'S NEXT WAVE EMERGES

This week's arXiv publications point to the research directions likely to shape AI over the next 12 months. Key papers include "AI Research Agents for Machine Learning," new work on how vision-language models handle conflicting information, and quantum-inspired encoding strategies that could substantially improve model efficiency.

🔍 Why this matters: Today's research papers are tomorrow's production capabilities. The focus on AI research agents suggests we're moving toward AI systems that can conduct their own research and development. For technical leaders, these papers offer a roadmap for anticipating capability advances.

Research Themes: Automated ML research, ethical AI frameworks, multimodal conflict resolution

Timeline Impact: Typically 6-12 months from paper to production implementation

🛡️ SPEAR AI: DEFENSE APPLICATIONS GET SERIOUS FUNDING

Spear AI, founded by U.S. Navy veterans, just secured $2.3M in first-round funding plus a $6M Navy contract for AI-powered analysis of submarine acoustic data. The company reflects a growing trend: specialized AI applications tackling high-value, niche problems with government and enterprise backing.

🔍 Why this matters: While everyone focuses on consumer AI, the real money is in specialized applications. Defense, healthcare, and infrastructure offer massive contracts and recurring revenue. Spear AI's traction shows that targeted AI solutions can reach venture scale in vertical markets.

Growth Plan: Doubling headcount from 40 to 80 employees

Market Strategy: Veterans leveraging domain expertise for AI applications

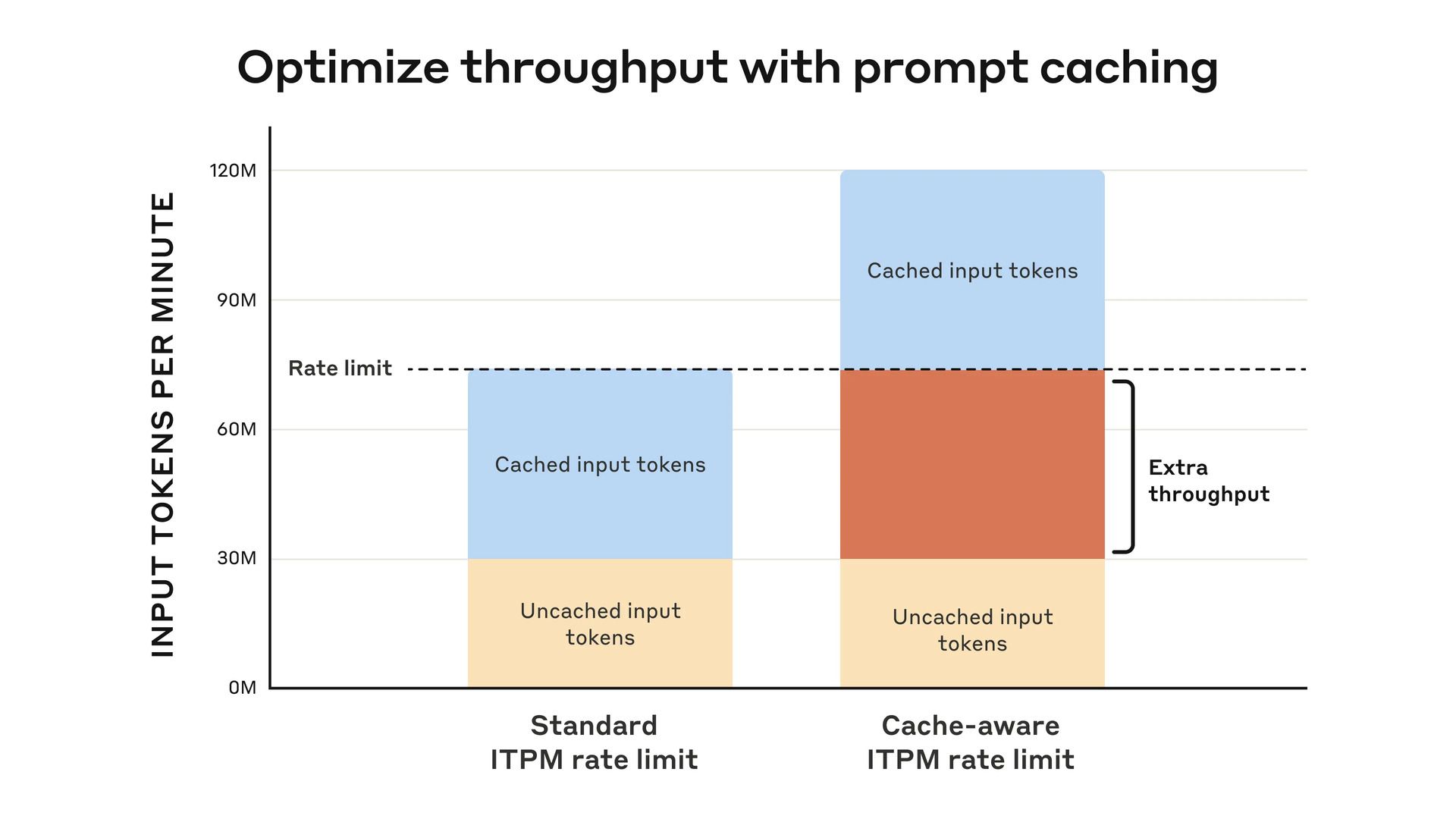

⚡ CLAUDE OPUS 4: ENTERPRISE BARRIERS LOWERED

Anthropic quietly but significantly raised rate limits for Claude Opus 4, easing a major scaling bottleneck for enterprise applications. The update lets developers build production-grade applications with far less risk of hitting usage caps during peak demand.

🔍 Why this matters: Rate limits have been a silent killer of AI application scalability. By raising them, Anthropic is signaling enterprise readiness and letting developers build with more confidence. The move directly challenges OpenAI's enterprise positioning.

Technical Impact: Higher throughput for building and scaling applications

Competitive Advantage: Reduced infrastructure worry for enterprise developers
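Even with higher limits, a traffic burst can still trigger a rate-limit error, so production clients usually retry instead of failing outright. The sketch below shows generic exponential backoff with full jitter in Python. It is not Anthropic's SDK or a documented Anthropic recommendation: `RateLimitError` and `call_with_backoff` are hypothetical names, and the retry count and delays are illustrative defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the rate-limit (HTTP 429) error your client raises."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # Cap grows 1s, 2s, 4s, ... up to max_delay; full jitter picks a
            # random wait in [0, cap] so many clients don't retry in lockstep.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_request() -> str:
        # Simulated request that is rate limited twice, then succeeds.
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise RateLimitError("429 Too Many Requests")
        return "ok"

    # Pass a no-op sleep so the demo finishes instantly.
    print(call_with_backoff(flaky_request, sleep=lambda s: None))
```

Injecting `sleep` keeps the helper testable without real waits, and the jitter spreads retries from many clients across time instead of sending them all back to the API at once.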

💡 GEMINI 2.5 FLASH LITE: EFFICIENCY REVOLUTION

Google released Gemini 2.5 Flash Lite, a fast, low-cost model optimized for price-performance. It targets the critical need for cost-effective AI deployment at scale, particularly for startups running high-volume processing workloads.

🔍 Why this matters: Cost efficiency is the make-or-break factor for AI application profitability. Flash Lite enables startups to process massive volumes without burning through runway. This democratizes access to capable AI for use cases that couldn't justify premium model costs.

Use Cases: High-volume processing, cost-sensitive applications, startup-friendly pricing

Positioning: Direct competition with GPT-3.5 Turbo and similar efficiency-focused models
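To make the runway argument concrete, here is a back-of-the-envelope monthly cost estimate in Python. The per-token prices are placeholder values chosen for illustration, so check Google's current pricing before relying on any number; `Pricing` and `monthly_cost` are hypothetical helpers, and the workload (1M requests per day, 500 input and 200 output tokens each) is assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per million tokens. Values used below are placeholders, not official rates."""
    input_per_million: float
    output_per_million: float


def monthly_cost(pricing: Pricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate monthly spend for a steady workload of identical requests."""
    daily_input_millions = requests_per_day * input_tokens / 1_000_000
    daily_output_millions = requests_per_day * output_tokens / 1_000_000
    daily_cost = (daily_input_millions * pricing.input_per_million
                  + daily_output_millions * pricing.output_per_million)
    return round(daily_cost * days, 2)


# Hypothetical price points for a "lite" tier and a "premium" tier.
LITE = Pricing(input_per_million=0.10, output_per_million=0.40)
PREMIUM = Pricing(input_per_million=3.00, output_per_million=15.00)

if __name__ == "__main__":
    workload = dict(requests_per_day=1_000_000, input_tokens=500, output_tokens=200)
    lite = monthly_cost(LITE, **workload)
    premium = monthly_cost(PREMIUM, **workload)
    print(f"lite: ${lite:,.2f}/month, premium: ${premium:,.2f}/month")
```

With these placeholder numbers the script prints `lite: $3,900.00/month, premium: $135,000.00/month`. The gap, not the absolute figures, is the point: at high volume, per-token price dominates total spend.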

🎯 THE NEXAVERSE TAKE

This week showed AI's democratization accelerating on several fronts: open-source models reaching commercial quality, enterprise platforms removing scaling barriers, and specialized applications proving venture viability. The message is clear: AI infrastructure is maturing rapidly, and the competitive advantage is shifting from access to execution.

For builders: the tools are ready, the funding is available, and the markets are hungry. The question isn't whether you can build with AI – it's whether you can ship faster than your competition.

🚀 BUILDING SOMETHING INCREDIBLE?

Tell us about your latest AI integration or which open-source model you're most excited to try. The AiNexaVerse community thrives on shared innovation and rapid experimentation.

Build fast. Ship faster. Stay AiNexaVerse.