AiNexaVerse News

Former OpenAI Board Member Explains CEO Sam Altman's Firing and Rehiring

Hello AI Lovers!

Today’s Topics Are:

- Former OpenAI Board Member Explains CEO Sam Altman's Firing and Rehiring

- OpenAI Reveals AI's Use in Global Influence Campaigns

- Meta Uses Your Posts to Train AI: How to Limit Data Sharing

Former OpenAI Board Member Explains CEO Sam Altman's Firing and Rehiring

KEY POINTS

Former OpenAI board member Helen Toner reveals why CEO Sam Altman was fired in November 2023.

The board was not informed about the ChatGPT release in November 2022 and learned about it via Twitter.

Altman was reinstated as CEO less than a week after his firing.

Helen Toner, former OpenAI board member, recently provided insights into the events leading to CEO Sam Altman's firing and rehiring. Speaking on a podcast, Toner detailed communication breakdowns and trust issues.

Toner revealed that the board was not informed about the ChatGPT release in November 2022, learning about it through Twitter. She also noted that Altman did not disclose his ownership of the OpenAI startup fund to the board.

According to Toner, Altman withheld information, misrepresented events, and sometimes outright lied to the board, causing a significant loss of trust. In October 2023, two executives told the board about negative experiences with Altman, describing a toxic atmosphere and psychological abuse. This led the board to conclude that Altman was not fit to lead the company toward achieving Artificial General Intelligence (AGI).

Altman was reinstated as CEO less than a week after his firing, following significant turmoil within OpenAI, including threatened resignations and investor pressure. An internal investigation concluded that there had been a significant breakdown of trust between the prior board and Altman, but that the board had acted in good faith.

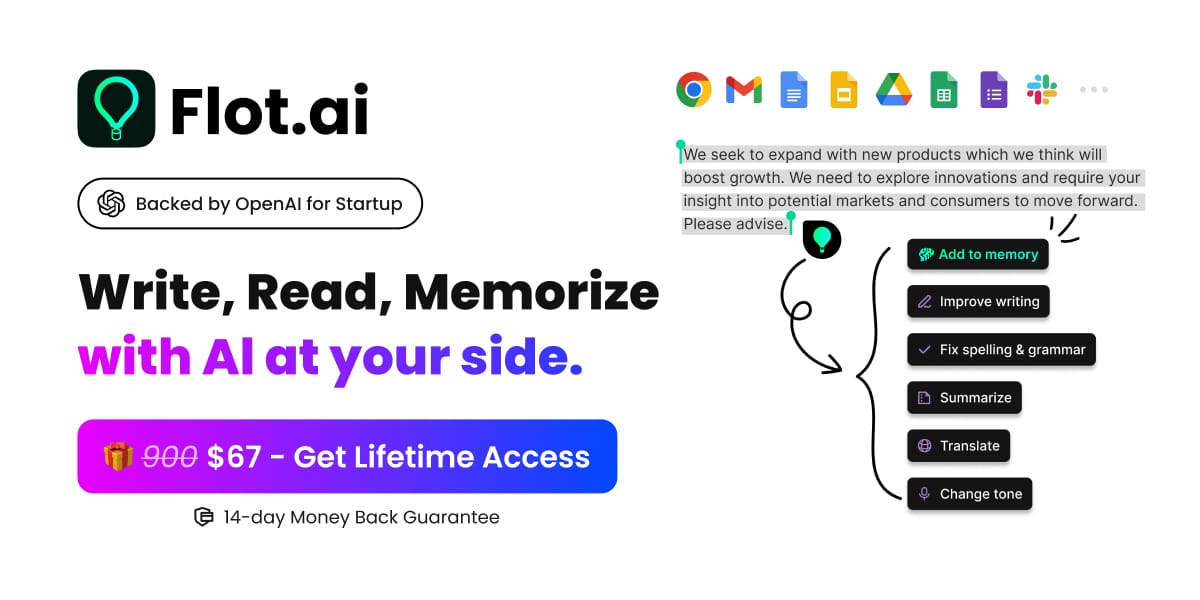

Transform How You Work and Organize Information

Flot is your all-in-one AI assistant, enhancing your writing, speeding up your reading, and helping you remember information across websites and apps. (Available on Windows and macOS.)

What Flot Brings:

- Seamless integration with any website and app

- Comprehensive writing and reading support:

  - Improve writing

  - Rewrite content

  - Grammar check

  - Translate

  - Summarize

  - Adjust tone

  - Customized prompts

- Save, search, and recall information with one click


OpenAI Reveals AI's Use in Global Influence Campaigns


KEY POINTS

OpenAI identified and disrupted five covert online influence campaigns using its AI technologies.

State actors and private companies from Russia, China, Iran, and Israel exploited OpenAI tools.

The AI-generated campaigns had limited impact and failed to gain much traction.

OpenAI announced it had identified and disrupted five global influence campaigns using its AI technologies to manipulate public opinion and influence geopolitics. The campaigns were run by state actors and private companies in Russia, China, Iran, and Israel.

The AI tools were used to generate social media posts, translate and edit articles, write headlines, and debug computer programs. Despite these efforts, the campaigns struggled to build an audience and had little impact.

Helen Toner, a former OpenAI board member, explained that while the technology could aid in creating disinformation, it has not yet led to the feared flood of convincing misinformation. The report highlights the need for vigilance as generative AI continues to evolve.

Meta Uses Your Posts to Train AI: How to Limit Data Sharing

KEY POINTS

Meta is using Facebook and Instagram posts to train its AI models.

Users in Europe received notifications about updates to the privacy policy.

U.S. users are also affected, but opting out is challenging.

Meta has revealed that it uses posts from Facebook, Instagram, and WhatsApp to train its AI models, raising concerns about data privacy. While private messages are not included, other shared content is fair game.

Recent Changes

European users recently received notifications about an updated privacy policy as Meta introduces new AI features in the region in compliance with the GDPR. The change takes effect on June 26, 2024. U.S. users did not receive a notification, but the policy is already in effect there, enabling AI features such as tagging the Meta AI chatbot in conversations and chatting with AI personas based on celebrities.

Opting Out: A Difficult Process

European and UK users have a "right to object," enabling them to opt out of sharing their data for AI training, though the process is cumbersome. Users in other regions face even more challenges, as the option to opt out is not readily available.

Steps to Limit Data Sharing

The most effective way to stop sharing data with Meta is to delete your accounts. However, there are methods to limit data sharing:

- Access the Meta Help Center: Visit the Meta Help Center page to submit requests related to your data.

- Submit a request: Choose from three options:

  - Access, download, or correct personal information.

  - Delete personal information.

  - Submit a concern about personal information used by AI.

These options are narrow and pertain specifically to third-party data. Meta reviews requests based on local laws, making it easier for EU or UK residents to manage their data.

Conclusion

As Meta's AI models evolve, so do the concerns about data privacy. While opting out is challenging, especially for U.S. users, understanding and navigating the available options is crucial for those looking to protect their information.

That was it for this week's news. We hope it was informative and insightful, as always!

We will start something special within a few months.

We will tell you more soon!

But for now, please refer us to other people who would like our content!

This will help us out big time!